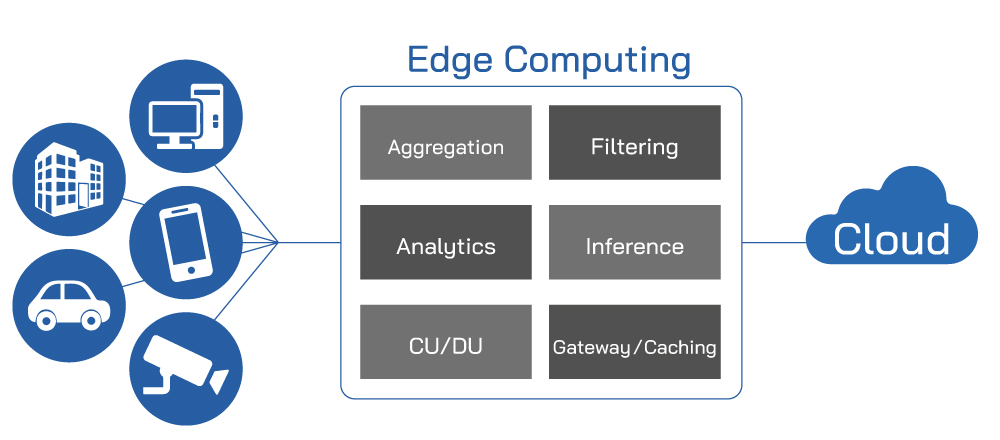

Multi-access Edge Computing (MEC), as defined by ETSI (the European Telecommunications Standards Institute), has been a trending topic for a while, and some of the builders of tomorrow's technologies are taking a close look at MEC edge servers.

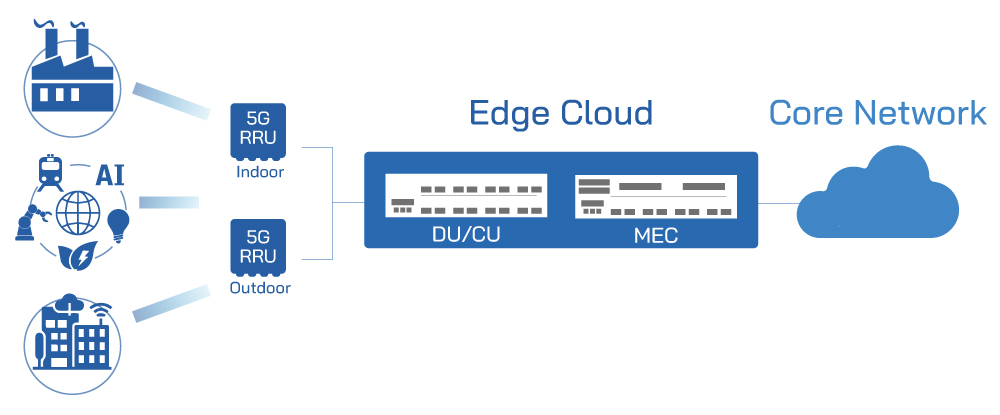

The aim of MEC is to provide edge services directly on or near cellular network radios. This opens up a whole new world of applications, along with hardware requirements to match. To allow third-party applications to run on the Radio Access Network (RAN), mobile operators need to make some infrastructure changes.

The first is a transformation to allow multi-tenancy on the RAN hardware. Leading this change is vRAN (virtual RAN), which is built on the same hardware abstraction technologies as VNFs (virtualized network functions) and other virtualized systems, and runs on top of a hypervisor to enable multiple isolated virtual machines.

Another area that needs advancement is the management and orchestration (MANO) software that deploys and manages the vRAN and the authorized third-party VMs hosting additional applications. The hardware is changing as well: the industry is moving toward generic x86 platforms to avoid vendor lock-in. Additional processing power and storage may be required to enable new applications or content caching.

Putting it all together, MEC aims to run new applications very close to new, virtualization-optimized RAN hardware in order to offer the lowest latency and reduce traffic on the core network. AEWIN is doing its part to enable deployments in this area. We are working with partners to implement O-RAN compliant MEC servers in the 5G arena, using an RU (Radio Unit) and combined CU (Centralized Unit) / DU (Distributed Unit) architecture that suits deployments ranging from large mobile operators to smaller private 5G networks. The secret sauce is a Xilinx FPGA that provides acceleration to ensure the highest bandwidth to 5G users.

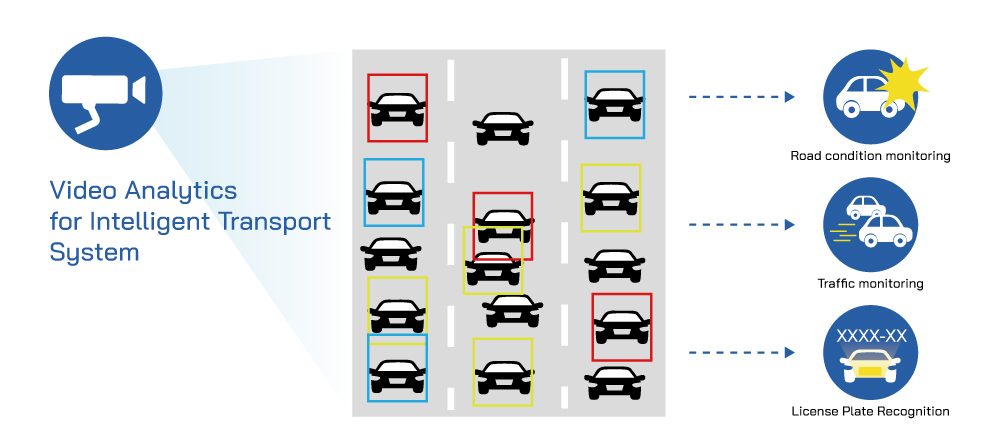

FPGAs and GPUs are a key addition for boosting the computing power of edge servers. These accelerators have potential in many fields, such as high-performance gaming, data and network traffic analytics, compression to save network bandwidth, IoT sensor data analytics, connected cars, and even real-time AI inference workloads. AEWIN has been working diligently with partners to enable real-time AI inference.

AI and GPU accelerators have been used for inferencing for the past few years, but the emergence of edge servers is what makes wide deployment of these real-time AI platforms possible. AEWIN and a partner have been using edge servers to deliver real-time road traffic analytics. The real-world application for this technology is traffic management and planning. Although transportation planners already collect traffic pattern data, AI can offer more data and more ways to analyze it. Beyond basic speed and throughput, AI can also track distance traveled and identify points of slowdown, dangerous road conditions, high-accident segments, and more. The eventual deployment of these systems will help city and traffic planners build more efficient and safer public roadway systems.
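To make the kind of metrics mentioned above concrete, here is a minimal Python sketch of how distance traveled and slowdown points could be derived once an upstream AI model has already produced per-vehicle position tracks. It is an illustration under stated assumptions, not AEWIN's or its partner's implementation: the `Observation` schema, the function names, and the 5 m/s threshold are all hypothetical.

```python
from dataclasses import dataclass
from math import hypot


@dataclass
class Observation:
    """One tracked position of a vehicle (hypothetical schema)."""
    t: float  # timestamp in seconds
    x: float  # position in metres, in a road-aligned frame
    y: float


def distance_travelled(track):
    """Sum the straight-line distances between consecutive observations."""
    return sum(
        hypot(b.x - a.x, b.y - a.y)
        for a, b in zip(track, track[1:])
    )


def slowdown_points(track, threshold_mps=5.0):
    """Return midpoints of the steps where speed falls below the threshold.

    threshold_mps is an assumed, illustrative value.
    """
    points = []
    for a, b in zip(track, track[1:]):
        dt = b.t - a.t
        if dt <= 0:
            continue  # skip duplicate or out-of-order samples
        speed = hypot(b.x - a.x, b.y - a.y) / dt
        if speed < threshold_mps:
            points.append(((a.x + b.x) / 2, (a.y + b.y) / 2))
    return points


# Made-up sample track: the vehicle moves at 15 m/s, then slows to 2 m/s.
track = [
    Observation(0.0, 0.0, 0.0),
    Observation(1.0, 15.0, 0.0),
    Observation(2.0, 30.0, 0.0),
    Observation(3.0, 32.0, 0.0),
    Observation(4.0, 34.0, 0.0),
]

print(distance_travelled(track))  # 34.0
print(slowdown_points(track))     # [(31.0, 0.0), (33.0, 0.0)]
```

Aggregating such slowdown points across many vehicles would be one way to surface the road segments that planners may want to look at first.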

There is a multitude of potential uses for computing power right at the edge, but there is no specific killer app yet. Some are still trying to make heads or tails of what to do with computing power so close to the edge. To start, instead of thinking about what it can do for your business, think about what sort of benefit you can bring to your customers. Which of the services you offer would give customers a better experience through lower latency, and which new applications could draw in new customers?

Thinking this way can start you down the path of building a sustainable and profitable edge computing deployment. We cannot be stuck in the “if you build it, they will come” mentality. These new services will have to offer tangible benefits to your users. A topical example is video streaming and CDNs. Fast, instant access to high-definition 4K video is a boon to those of us who, with the pandemic continuing, prefer a night in on the couch to a night out.

Direct access to libraries' worth of movies and TV shows without worrying about network bandwidth or latency is a tangible benefit. Think about what value you can bring to the customer and what they are willing to pay for, then build a monetization structure around these improved services. Figuring out the right model requires a lot of thinking and hypotheticals, and it is unique to every company's product mix. We're looking forward to talking with you about your applications!

| Processor support | System memory | PCIe expansion | Other |
| --- | --- | --- | --- |
| Intel Xeon D-2100 (Skylake-D) processors | 2400/2666 MHz | 2x PCIe x8 for NIC, GPU or FPGA, optional 1x PCIe x16 | Intel® QAT (by SKU) |
| Dual 2nd Gen Intel Xeon Scalable Processors | 2400/2666 MHz | 8x PCIe x8 for NIC, GPU or FPGA, optional 4x PCIe x16 | |
| 2nd Gen AMD® EPYC™ 7002 (Rome) processors | 8-channel DDR4 3200 MHz | 4x PCIe x8 for NIC, GPU or FPGA, optional 1x PCIe x16 + 2x PCIe x8 | |

Read more:

Mobile Edge AI Computing/Multi-access Edge Computing

AEWIN have the right solution for your Edge AI Computing