Deliver AI on AEWIN Edge Servers

We’re experiencing the dawn of the Age of Edge AI, as NVIDIA CEO and founder Jensen Huang detailed in his vision at the recent GTC event. AI continues to be a hot topic in the news and blogosphere. According to Intel research, 75% of enterprise applications will use AI by 2021. This creates a large demand for GPU servers to host these new enterprise-focused applications. As a network appliance specialist, AEWIN has tangible advantages over traditional datacenter server makers, who are trying to miniaturize their servers to fit the constraints of edge deployment while also absorbing the added TDP of GPU accelerators.

Our network-focused designs are edge ready: they are compact and built to higher temperature design requirements by default. The front-access design gives the GPUs access to the coolest air, helping to keep them at peak performance no matter where the server is deployed. Our designs have been refined over generations, whereas datacenter-focused manufacturers are validating their designs for the first time, without the trials of scaled deployments. With AEWIN, you’re buying a robust and tested design without the hassle of being the manufacturer’s test case.

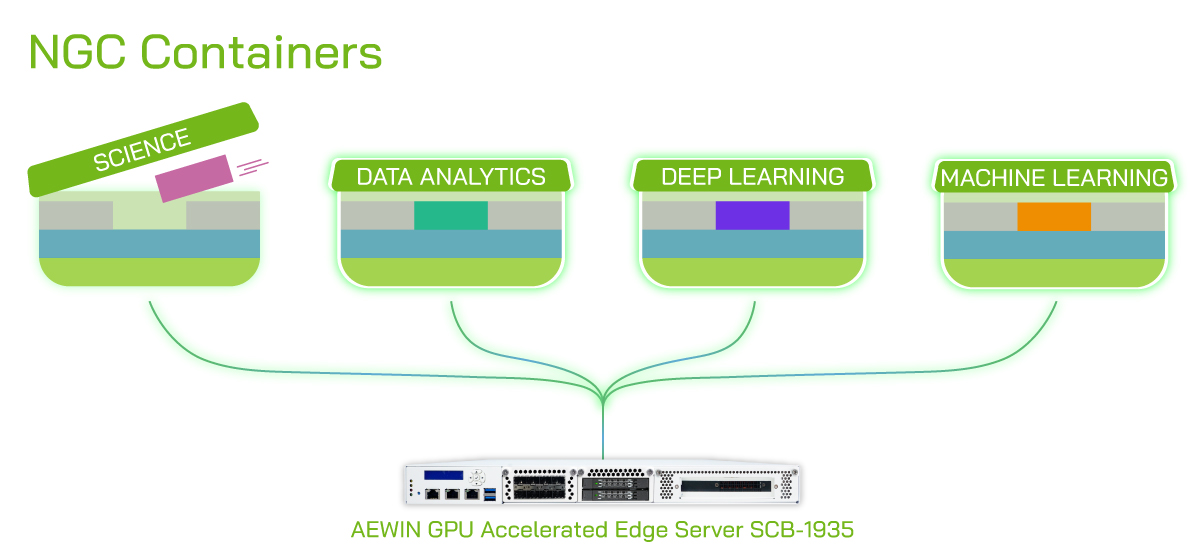

As exemplified in our previous blog post (Video Analytics – Fine Tuning for Big Gains), we’re not satisfied with just changing a few mechanical bits to accommodate GPUs. We benchmark intensively with NGC containers and fine-tune firmware to offer the best performance possible. All of our experience working with the NVIDIA NGC container platform is available for your projects. Our goal is to enable our customers to quickly deliver AI on AEWIN edge servers, which is, after all, the title of this blog post! As a member of the AEWIN Edge Servers team, I am hugely excited to finally let the fruits of our labor be known. Please don’t be shy: come talk to us about GPU-powered edge servers.

Read more:

AEWIN announce the latest addition to our edge AI focused portfolio

What is Edge AI? How about Edge AI application?

AEWIN have the right solutions for your Edge AI computing needs.