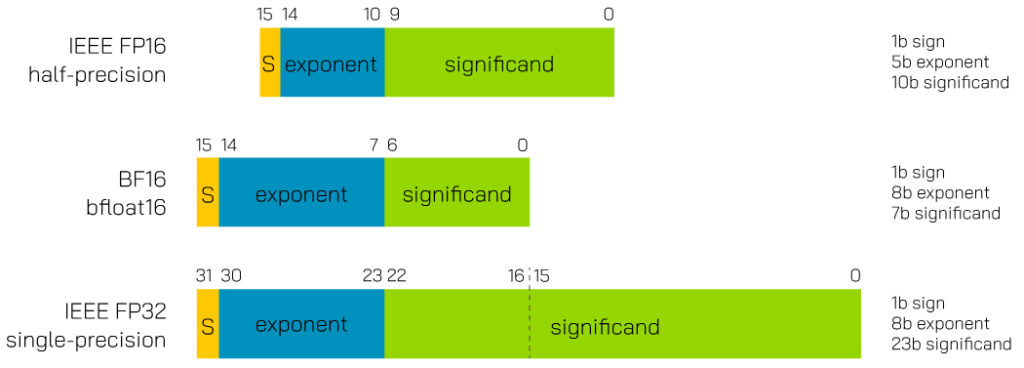

AI computation is expensive, especially when working in FP32, where every value occupies 32 bits. Half-precision FP16 has only a 10-bit significand and a 5-bit exponent, which reduces both the precision and the range of representable numbers compared to FP32.

BFloat16 was developed by Google specifically to address the needs of AI workloads, which require a large range of numbers but place less demand on the precision of the significand. BFloat16 is essentially FP32 with a truncated significand, combining the performance of FP16 with the dynamic range of FP32 while using half the memory of FP32. Conversion is also easy: existing FP32 data can be truncated to BF16 for further neural processing. BF16 offers enough precision, no more. It is the right tool for the job.
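The truncation described above can be sketched in a few lines of Python. This is a minimal illustration, not any vendor's implementation: the function names `fp32_to_bf16_bits` and `bf16_bits_to_fp32` are hypothetical, and the sketch simply drops the low 16 bits of the FP32 encoding. Real hardware and libraries often round to nearest even instead of truncating.

```python
import struct

def fp32_to_bf16_bits(x):
    """Return the 16-bit BF16 pattern obtained by truncating the FP32 encoding of x."""
    # Reinterpret the FP32 value as a 32-bit unsigned integer.
    (bits32,) = struct.unpack("<I", struct.pack("<f", x))
    # Keep the top 16 bits: sign, 8 exponent bits, and the top 7 significand bits.
    return bits32 >> 16

def bf16_bits_to_fp32(bits16):
    """Widen a BF16 bit pattern back to FP32 by padding the significand with zeros."""
    (x,) = struct.unpack("<f", struct.pack("<I", (bits16 & 0xFFFF) << 16))
    return x

if __name__ == "__main__":
    bits = fp32_to_bf16_bits(3.14159)
    print(hex(bits), bf16_bits_to_fp32(bits))
```

Because the exponent field is untouched, the round trip preserves the magnitude of the value and loses only the low-order significand bits.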

BF16 (bfloat16) layout:

1 bit for sign

8 bits for exponent

2^-126 minimum positive normal value to just under 2^128 maximum positive value (the exponent has a zero offset, or bias, of 127).

7 bits for significand
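As a rough sketch of how the three fields combine, the following Python splits a 16-bit pattern into sign, exponent, and significand and computes the value it represents. The helper names `decode_bf16` and `bf16_value` are hypothetical, and the handling of subnormals, infinity, and NaN assumes the usual IEEE 754 style conventions, which the text above does not spell out.

```python
def decode_bf16(bits16):
    """Split a 16-bit BF16 pattern into (sign, exponent, significand) fields."""
    sign = (bits16 >> 15) & 0x1       # 1 bit
    exponent = (bits16 >> 7) & 0xFF   # 8 bits, biased by 127
    significand = bits16 & 0x7F       # 7 bits
    return sign, exponent, significand

def bf16_value(bits16):
    """Compute the real value encoded by a BF16 bit pattern."""
    sign, exponent, significand = decode_bf16(bits16)
    factor = -1.0 if sign else 1.0
    if exponent == 0xFF:
        # All-ones exponent: infinity if the significand is zero, otherwise NaN.
        return factor * float("inf") if significand == 0 else float("nan")
    if exponent == 0:
        # Zero exponent: zero or a subnormal with no implicit leading 1.
        return factor * (significand / 128.0) * 2.0 ** -126
    # Normal number: implicit leading 1, exponent bias of 127.
    return factor * (1.0 + significand / 128.0) * 2.0 ** (exponent - 127)

if __name__ == "__main__":
    print(bf16_value(0x0080))  # smallest positive normal value
    print(bf16_value(0x7F7F))  # largest finite value
```

The two printed values bracket the normal range: the smallest positive normal number is 2^-126 and the largest finite number is (2 - 2^-7) × 2^127, just under 2^128.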

We’re on the edge of the Age of AI. A common, fast number format targeted at AI will accelerate us in the right direction. The industry has gotten on board with the BFloat16 format, with support across a wide range of hardware platforms. Google, the inventor of the format, has its own TPU (tensor processing unit) available in the cloud. Nvidia, the de facto leader in AI accelerators, has also embraced bf16 and implemented it in the tensor cores of its latest Ampere-based silicon. CPU giant Intel offers a specialized solution in its Nervana accelerators and has integrated BF16 into its AVX-512 extensions for cases where the AI workload is light enough to be handled by the CPU itself. ARM has also integrated bf16 into its SVE and Neon instructions. This is significant because ARM v8 is in use across a wide range of platforms, from mobile all the way up to infrastructure.

Table 1 – Selected hardware list for BFloat16 support

| CPU | Support |
| --- | --- |
| 1st & 2nd Generation Intel® Xeon® Scalable Processors | no |
| 3rd Generation Intel® Xeon® Scalable Processors (Cooper Lake) | yes |

| GPU | Support |
| --- | --- |
| Nvidia Volta (V100) | no |
| Nvidia Turing (T4) | no |
| Nvidia Ampere (A100) | yes |
| AMD Radeon RX6000 | no |
| AMD Radeon Instinct | yes |